URAP

Ranking by Academic Performance

The URAP ranking forms part of the Turkish modernisation and excellence projects. As such, it is oriented towards benchmarking MENA institutions against their global counterparts and building their capacity.

The URAP ranking system focuses on academic quality. URAP has gathered data on 2,500 higher education institutions (HEIs) in order to rank them by academic performance. The ranking covers HEIs but excludes governmental academic institutions such as the Chinese Academy of Sciences and the Russian Academy of Sciences. Data for 2,500 HEIs have been processed, and the top 2,000 of them are scored. URAP thus covers approximately 10% of all HEIs in the world, which makes it one of the most comprehensive university ranking systems.

Funding and compilation

URAP is a nonprofit organization. Its team members are researchers at Middle East Technical University (METU) who work voluntarily at the URAP Research Laboratory as a public service. The University Ranking by Academic Performance (URAP) Research Laboratory was established at the Informatics Institute of METU in 2009. The main objective of URAP is to develop a ranking system for world universities based on academic performance, as determined by the quality and quantity of scholarly publications. In line with this objective, a yearly world ranking of 2,000 higher education institutions has been released since 2010.

| Area | Metric | Weighting | Source |

|---|---|---|---|

| ARTICLE | is a measure of current scientific productivity which includes articles published in 2013 and indexed by Web of Science and listed by Incites. Article number covers articles, reviews and notes. The weight of this indicator on the overall ranking is %21. | 21% | Web of Science SCI and SSCI |

| CITATION | is a measure of research impact and scored according to the total number of citations received in 2011-2013 for the articles published in 2011-2013 and indexed by Web of Science. The effect of citation on the overall ranking is %21. | 21% | Web of Science SCI and SSCI |

| TOTAL DOCUMENT | is the measure of sustainability and continuity of scientific productivity and presented by the total document count which covers all scholarly literature including conference papers, reviews, letters, discussions, scripts in addition to journal articles published during 2011-2013 period. The weight of this indicator is %10. | 10% | Web of Science SCI and SSCI |

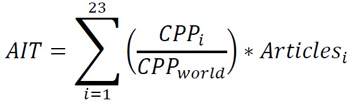

| ARTICLE IMPACT TOTAL (AIT) | is a measure of scientific productivity corrected by the institution’s normalized CPP(1) with respect to the world CPP in 23 subject areas between 2011 and 2013. The ratio of the institution’s CPP and the world CPP indicates whether the institution is performing above or below the world average in that field. This ratio is multiplied by the number of publications in that field and then summed across the 23 fields. which is summarized in the following formula:  This indicator aims to balance the institution’s scientific productivity with the field normalized impact generated by those publications in each field. | 18% | Web of Science SCI and SSCI |

| CITATION IMPACT TOTAL | is a measure of research impact corrected by the institution’s normalized CPP with respect to the world CPP in 23 subject areas between 2011 and 2013. The ratio of the institution’s CPP and the world CPP indicates whether the institution is performing above or below the world average in that field. This ratio is multiplied by the number of citations in that field and then summed across the 23 fields. This indicator aims to balance the institution’s scientific impact with the field normalized impact generated by the publications in each field, , which is summarized in the following formula: | 15% | Web of Science SCI and SSCI |

| INTERNATIONAL COLLABORATION | is a measure of global acceptance of a university. International collaboration data, which is based on the total number of publications made in collaboration with foreign universities, is obtained from InCites for the years 2011-2013. The weight of this indicator is %15 in the overall ranking. The 23 subject areas used in the ranking are based on the discipline classification matrix developed by the Australian Research Council for journals indexed in Web of Science(3 | 15% | Web of Science SCI and SSCI |
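The two impact indicators described in the table (the institution-to-world CPP ratio, multiplied by the publication or citation count in each field and summed across fields) can be illustrated with a minimal Python sketch. The `FieldStats` type, the `impact_totals` function and the sample numbers are hypothetical and chosen only for illustration; they are not URAP data or URAP code, and the handling of fields with no publications is an assumption.

```python
from dataclasses import dataclass


@dataclass
class FieldStats:
    """Per-field inputs for one institution (hypothetical structure)."""
    articles: int      # institution's publications in the field
    citations: int     # citations received by those publications
    world_cpp: float   # world citations-per-publication in the field


def impact_totals(fields):
    """Return (AIT, CIT) summed over the given subject fields."""
    ait = 0.0
    cit = 0.0
    for f in fields:
        # Assumption: fields with no publications (or no world CPP)
        # contribute nothing rather than raising a division error.
        if f.articles == 0 or f.world_cpp == 0:
            continue
        institution_cpp = f.citations / f.articles
        ratio = institution_cpp / f.world_cpp  # > 1 means above world average
        ait += ratio * f.articles
        cit += ratio * f.citations
    return ait, cit


# Two made-up fields: one above and one below the world average.
example = [
    FieldStats(articles=100, citations=500, world_cpp=4.0),  # ratio 1.25
    FieldStats(articles=50, citations=100, world_cpp=4.0),   # ratio 0.50
]
ait, cit = impact_totals(example)
print(ait, cit)  # 150.0 675.0
```

In this made-up case the stronger field contributes 125 of the 150 AIT points even though it holds only two thirds of the publications, which shows how the ratio rewards output in fields where the institution is cited above the world average.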

Data Collection

Data are gathered from Web of Science and other sources that provide lists of HEIs. The 2,500 HEIs with the highest number of publications were initially considered, and 2,000 of them were ranked after data processing. Statistical analysis indicated that the raw bibliometric data underlying the indicators had highly skewed distributions. Therefore, indicator values above and below the median are scored linearly in two separate groups. A Delphi process was conducted with a group of experts to assign weights to the indicators. A total score of 600 is distributed among the indicators.
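As a rough illustration of the scoring step, the Python sketch below first splits the 600 points according to the percentage weights listed in the indicator table, then applies a two-group linear scoring around the median. The exact mapping URAP uses is not given here, so the scheme in `two_group_scores` is an assumption: values from the minimum to the median are mapped linearly onto the lower half of an indicator's points, and values from the median to the maximum onto the upper half. All function names and sample values are hypothetical.

```python
from statistics import median

# Percentage weights as listed in the indicator table.
WEIGHT_PERCENT = {
    "article": 21,
    "citation": 21,
    "total_document": 10,
    "article_impact_total": 18,
    "citation_impact_total": 15,
    "international_collaboration": 15,
}
TOTAL_POINTS = 600


def indicator_points():
    """Split the 600 total points in proportion to the weights."""
    return {name: pct * TOTAL_POINTS // 100 for name, pct in WEIGHT_PERCENT.items()}


def two_group_scores(values, max_points):
    """Score raw values linearly in two groups split at the median.

    Assumed scheme (not confirmed by the source):
    min..median -> 0..max_points/2, median..max -> max_points/2..max_points.
    """
    lo, mid, hi = min(values), median(values), max(values)
    half = max_points / 2
    scores = []
    for v in values:
        if v <= mid:
            span = mid - lo
            scores.append(half * (v - lo) / span if span else half)
        else:
            # v > mid implies hi > mid, so the span is never zero here.
            scores.append(half + half * (v - mid) / (hi - mid))
    return scores


points = indicator_points()
raw_articles = [10, 20, 30, 40, 1000]  # made-up, heavily skewed counts
scores = two_group_scores(raw_articles, points["article"])
print(points["article"], scores)
```

With a single linear scale from 10 to 1000, the first four institutions would all score close to zero. Splitting at the median spreads them over the lower half of the range, which is the motivation the text gives for treating the two groups separately.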